Contextual AI presents GLM, a grounded language model set to exceed the accuracy of GPT-4o

The artificial intelligence landscape is evolving rapidly, with enterprises demanding more from their AI solutions than ever before. As businesses increasingly rely on AI to drive decision-making, streamline operations, and enhance customer experiences, the need for accuracy and reliability has become paramount. Traditional language models, while impressive in their general capabilities, often fall short when it comes to handling precision-critical tasks in high-stakes business environments.

Enter Contextual AI's Grounded Language Model (GLM), a groundbreaking solution tailored specifically for enterprise needs. This innovative AI model promises to address the longstanding challenges of factual accuracy and reliability that have plagued many AI implementations in the business world.

Contextual AI: Pioneering the Future of Enterprise AI

A Vision Rooted in Expertise

Contextual AI isn't just another AI startup jumping on the bandwagon. Founded by the pioneers of retrieval-augmented generation (RAG) technology, the company brings a wealth of expertise to the table. Douwe Kiela, CEO and co-founder of Contextual AI, played a pivotal role in the development of RAG during his time at Meta's Fundamental AI Research (FAIR) team.

“We knew that part of the solution would be a technique called RAG — retrieval-augmented generation,” Kiela explained in a recent interview. “And we knew that because I was a co-inventor of RAG. What this company is about is really about doing RAG the right way, to kind of the next level of doing RAG.”

This deep understanding of RAG technology forms the foundation of Contextual AI's approach to enterprise AI solutions. By focusing on the specific needs of businesses operating in regulated and high-stakes environments, the company has positioned itself as a leader in the development of AI models that prioritize accuracy and reliability above all else.

Tackling the Hallucination Problem Head-On

One of the most significant challenges facing AI in enterprise settings is the issue of hallucinations — instances where AI models generate plausible-sounding but factually incorrect information. These hallucinations can have serious consequences in business environments, potentially leading to misinformed decisions, compliance issues, or damage to a company's reputation.

Contextual AI has made it its mission to address this problem by developing AI models that are specifically designed to minimize hallucinations and prioritize factual accuracy. The GLM represents the culmination of this effort, offering a level of precision and reliability that sets it apart from general-purpose language models.

Groundedness: The Core Pillar of GLM's Success

Defining Groundedness in AI

At the heart of Contextual AI's approach is the concept of “groundedness.” In the context of AI-generated responses, groundedness refers to the degree to which an AI model's output is directly supported by and accurately reflects the information it has been provided.

A grounded AI model, like the GLM, is designed to adhere strictly to the knowledge sources it has been given. When presented with a query and a set of relevant documents, a truly grounded model will either respond with information that is directly derived from those documents or, if the information is not available, clearly state that it cannot answer the question.
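The answer-or-abstain behavior described above can be illustrated with a toy sketch. This is not how the GLM works internally: it uses plain word overlap instead of a language model, and the function names, the `min_overlap` threshold, and the abstention message are all assumptions made for illustration.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def grounded_answer(query, documents, min_overlap=2):
    """Return the best-matching document sentence, or abstain.

    Toy stand-in for grounded behavior: lexical overlap only, no language model.
    `min_overlap` is a hypothetical threshold chosen for this example.
    """
    query_tokens = tokenize(query)
    best_sentence, best_score = None, 0
    for doc in documents:
        # Split each document into sentences on terminal punctuation.
        for sentence in re.split(r"(?<=[.!?])\s+", doc):
            score = len(query_tokens & tokenize(sentence))
            if score > best_score:
                best_sentence, best_score = sentence, score
    if best_score < min_overlap:
        # Nothing in the provided documents supports an answer, so abstain.
        return "I cannot answer this from the provided documents."
    return best_sentence

docs = ["The refund window is 30 days. Shipping is free over 50 dollars."]
print(grounded_answer("What is the refund window?", docs))
print(grounded_answer("Who founded the company?", docs))
```

The first call quotes a sentence taken from the supplied document, while the second abstains because no sentence shares enough words with the question.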

This approach stands in stark contrast to many general-purpose language models, which often rely heavily on their pre-trained knowledge and may generate responses that, while plausible, are not necessarily grounded in the specific information provided for a given task.

The GLM Advantage: Precision Over Creativity

The GLM's focus on groundedness gives it a significant advantage in enterprise settings where factual accuracy is critical. Unlike models such as GPT-4o, which are designed to handle a wide range of tasks from creative writing to technical analysis, the GLM is purpose-built for scenarios where precision and reliability are non-negotiable.

Kiela emphasizes this point: “If you have a RAG problem and you're in an enterprise setting in a highly regulated industry, you have no tolerance whatsoever for hallucination. The same general-purpose language model that is useful for the marketing department is not what you want in an enterprise setting where you are much more sensitive to mistakes.”

This laser focus on groundedness allows the GLM to excel in tasks that require a high degree of factual accuracy, making it an ideal choice for industries such as finance, healthcare, and telecommunications, where even small errors can have significant consequences.

The FACTS Benchmark: Proving GLM's Superiority in Factual Accuracy

Setting a New Standard for AI Performance

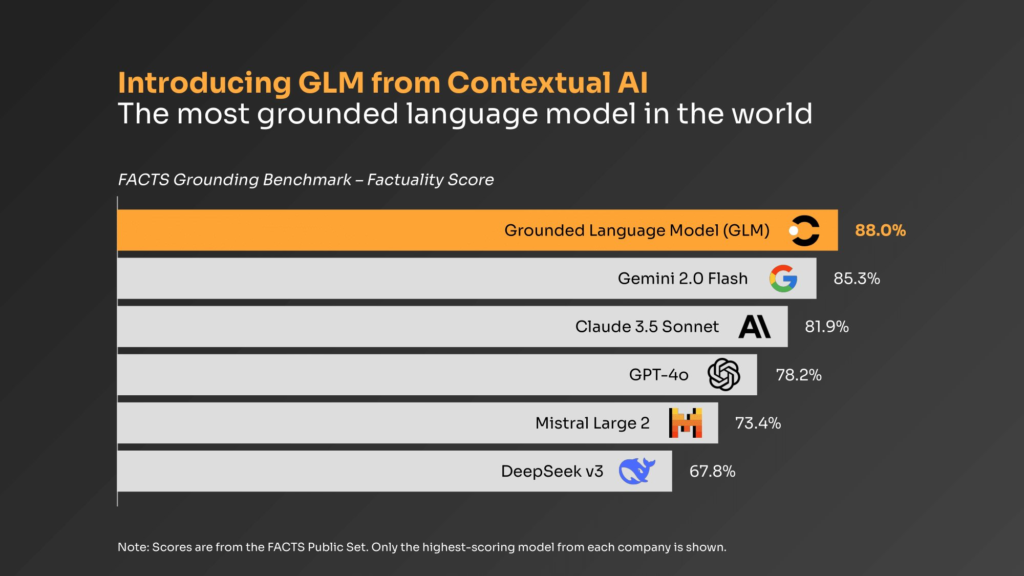

To demonstrate the GLM's capabilities, Contextual AI evaluated it on the FACTS benchmark, a widely recognized benchmark for evaluating the factual accuracy of AI models, and reported the results below.

The GLM achieved a factuality score of 88% on the FACTS benchmark, significantly outperforming its competitors:

- Google's Gemini 2.0 Flash: 84.6%

- Anthropic's Claude 3.5 Sonnet: 79.4%

- OpenAI's GPT-4o: 78.8%

These results are more than just numbers; they represent a significant leap forward in the quest for reliable AI solutions in enterprise settings. The GLM's performance on this benchmark underscores its potential to transform how businesses approach AI implementation, particularly in scenarios where factual accuracy is paramount.
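To make the gaps between the reported scores concrete, the short sketch below computes the GLM's lead over each model in percentage points. The scores are the ones quoted above; the dictionary keys and the `margins` helper are illustrative names, not part of any published tooling.

```python
# FACTS scores as quoted in the article (percent).
scores = {
    "Contextual AI GLM": 88.0,
    "Gemini 2.0 Flash": 84.6,
    "Claude 3.5 Sonnet": 79.4,
    "GPT-4o": 78.8,
}

def margins(scores, reference):
    """Percentage-point lead of `reference` over every other model."""
    ref = scores[reference]
    return {
        name: round(ref - value, 1)  # round to hide floating-point noise
        for name, value in scores.items()
        if name != reference
    }

for name, gap in margins(scores, "Contextual AI GLM").items():
    print(f"{name}: GLM leads by {gap} points")
```

On these figures the margins are 3.4, 8.6, and 9.2 percentage points respectively.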

Why FACTS Matters for Enterprise AI Adoption

The FACTS benchmark is designed to evaluate an AI model's ability to generate factually accurate responses across a wide range of domains and tasks. For enterprises considering AI adoption, performance on this benchmark provides a crucial indicator of a model's reliability and suitability for real-world applications.

By outperforming leading models from tech giants like Google, Anthropic, and OpenAI, the GLM has positioned itself as a frontrunner in the race to develop AI solutions that businesses can truly rely on. This achievement is likely to accelerate enterprise AI adoption, particularly in industries where the stakes are high and the margin for error is slim.

Breaking the Cycle of Hallucination in AI

The Hallucination Problem: A Major Roadblock for Enterprise AI

AI hallucinations have long been a thorn in the side of businesses looking to leverage AI technology. These instances of AI-generated misinformation can occur even when a model is provided with accurate source material, as general-purpose models often prioritize their pre-trained knowledge over specific retrieved information.

The consequences of these hallucinations in business settings can be severe. From customer service chatbots providing incorrect policy information to financial analysis tools generating inaccurate market predictions, the potential for AI-induced errors has made many enterprises wary of fully embracing AI technology.

GLM's Approach: Prioritizing Precision and Traceability

The GLM tackles the hallucination problem head-on by fundamentally changing how the AI model processes and generates information. Instead of relying heavily on pre-trained knowledge, the GLM is designed to prioritize the specific information it has been provided for each task.

This approach ensures that the model's responses are directly traceable to the source material, significantly reducing the risk of hallucinations. When the GLM generates a response, users can be confident that the information is grounded in the provided context, rather than being a product of the model's general knowledge.

Inline Attributions: Building Trust Through Transparency

Citing Sources in Real-Time

One of the GLM's most innovative features is its ability to provide inline attributions. As the model generates responses, it simultaneously cites the sources of the information it's using, integrating these citations directly into the text.

This real-time attribution system serves several crucial functions:

- It enhances transparency by allowing users to see exactly where each piece of information is coming from.

- It builds trust by demonstrating that the model is adhering to the provided information sources.

- It facilitates fact-checking and verification, which are crucial in regulated industries.
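As a rough illustration of what inline attribution looks like in output, the sketch below attaches a numbered marker to each sentence and lists the cited sources afterward. The `Claim` structure and the `[n]` marker format are assumptions for this example; the article does not specify the GLM's actual citation format.

```python
from dataclasses import dataclass

@dataclass
class Claim:
    text: str          # one sentence of the generated answer
    source_index: int  # position of the supporting document in `sources`

def render_with_attributions(claims, sources):
    """Join claims into one answer with a [n] marker after each, then list the sources."""
    for claim in claims:
        # Refuse to render a claim that cites a source we were never given.
        if not 0 <= claim.source_index < len(sources):
            raise IndexError(f"claim cites missing source {claim.source_index}")
    body = " ".join(f"{c.text} [{c.source_index + 1}]" for c in claims)
    refs = "\n".join(f"[{i + 1}] {title}" for i, title in enumerate(sources))
    return f"{body}\n\nSources:\n{refs}"

sources = ["Refund Policy v3", "Shipping FAQ"]
claims = [
    Claim("Refunds are accepted within 30 days.", 0),
    Claim("Shipping is free over 50 dollars.", 1),
]
print(render_with_attributions(claims, sources))
```

Rejecting a claim whose source is missing mirrors the audit-trail idea: every statement in the answer should point to something a reviewer can open and check.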

The Enterprise Advantage of Inline Attributions

For businesses operating in regulated industries or dealing with sensitive information, the ability to trace AI-generated content back to its source is invaluable. Inline attributions provide an audit trail that can be crucial for compliance purposes, risk management, and decision-making processes.

This feature sets the GLM apart from models that rely solely on parametric knowledge, offering a level of accountability that is essential in high-stakes business environments. Whether it's generating reports for regulatory bodies, providing customer support in the financial sector, or assisting with medical research, the GLM's inline attributions ensure that every piece of information can be verified and traced back to its origin.

RAG 2.0: Revolutionizing Information Retrieval

Beyond Traditional RAG Systems

While retrieval-augmented generation (RAG) has been a game-changer in the world of AI, Contextual AI has extended the concept with what it calls “RAG 2.0.” This approach moves beyond simply connecting off-the-shelf components and instead focuses on creating a cohesive, optimized system for information retrieval and generation.

Traditional RAG systems often suffer from what Contextual AI describes as a “Frankenstein's monster” problem: the individual components technically work, but the overall system is far from optimal. RAG 2.0 addresses this by jointly optimizing all components of the system, from retrieval to generation.

The Components of RAG 2.0

Contextual AI's RAG 2.0 system includes several key components:

- Intelligent Retrieval: A “mixture-of-retrievers” component that analyzes the question and plans a strategy for retrieval.

- Advanced Re-ranking: What Kiela calls “the best re-ranker in the world,” which prioritizes the most relevant information before passing it to the language model.

- Grounded Generation: The GLM itself, which takes the retrieved and re-ranked information to generate accurate, grounded responses.

By integrating these components and optimizing them as a unified system, RAG 2.0 achieves a level of performance that surpasses traditional RAG implementations.
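The three stages can be sketched as a minimal pipeline to show how data flows from retrieval through re-ranking to generation. This is a toy under stated assumptions: the retrievers, the overlap-based scoring, and the template "generator" are placeholders invented here, and the sketch does not reproduce the joint optimization that distinguishes RAG 2.0.

```python
import re

def tokens(text):
    """Lowercased alphanumeric word tokens of a string, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def keyword_retriever(query, corpus):
    """Return documents sharing at least one token with the query."""
    q = tokens(query)
    return [doc for doc in corpus if q & tokens(doc)]

def phrase_retriever(query, corpus):
    """Return documents containing the full query as a substring."""
    return [doc for doc in corpus if query.lower() in doc.lower()]

def retrieve(query, corpus, retrievers):
    """Union of candidates from several retrievers, de-duplicated, order preserved."""
    seen, candidates = set(), []
    for retriever in retrievers:
        for doc in retriever(query, corpus):
            if doc not in seen:
                seen.add(doc)
                candidates.append(doc)
    return candidates

def rerank(query, candidates, top_k=2):
    """Order candidates by token overlap with the query (toy scoring), keep top_k."""
    q = tokens(query)
    return sorted(candidates, key=lambda d: len(q & tokens(d)), reverse=True)[:top_k]

def generate(query, passages):
    """Placeholder generator: quote the top passage or abstain. No language model involved."""
    if not passages:
        return "No supporting passage found."
    return f"According to the provided documents: {passages[0]}"

def answer(query, corpus):
    """Run the retrieve, rerank, generate stages in sequence."""
    candidates = retrieve(query, corpus, [keyword_retriever, phrase_retriever])
    return generate(query, rerank(query, candidates))

corpus = [
    "Invoices are due within 30 days.",
    "Late invoices incur a 2 percent fee.",
    "The office closes at 6 pm.",
]
print(answer("When are invoices due?", corpus))
```

Here each stage is a separate function with its own heuristic, which is exactly the loosely coupled arrangement the article contrasts with a jointly optimized system.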

GLM's Multimodal Capabilities: Beyond Plain Text

Expanding the Scope of AI Understanding

While the GLM's text processing capabilities are impressive on their own, Contextual AI has pushed the boundaries further by incorporating multimodal content processing. The model can now handle a variety of data types, including:

- Charts and diagrams

- Structured data from databases

- Complex visualizations, such as circuit diagrams

This multimodal capability is particularly relevant for industries that rely on a mix of textual and visual information. For example, in the semiconductor industry, the ability to process circuit diagrams alongside textual specifications can significantly enhance the AI's utility.

Bridging Structured and Unstructured Data

One of the most exciting aspects of the GLM's multimodal capabilities is its ability to work at the intersection of structured and unstructured data. This is crucial for many enterprise applications where information is spread across various formats and systems.

Kiela highlights this as a key focus area: “What I'm mostly excited about is really this intersection of structured and unstructured data. Most of the really exciting problems in large enterprises are smack bang at the intersection of structured and unstructured, where you have some database records, some transactions, maybe some policy documents, maybe a bunch of other things.”

By integrating with popular data platforms like BigQuery, Snowflake, Redshift, and Postgres, the GLM can provide insights that draw from both structured databases and unstructured text documents, offering a more comprehensive view of the available information.
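One simple way to picture the combination of structured and unstructured data is to serialize database rows as text and place them alongside policy documents in a single pool of knowledge snippets. The sketch below assumes an in-memory SQLite database as a stand-in for a warehouse such as those named above; the table, the `rows_as_knowledge` helper, and the snippet format are hypothetical and do not describe Contextual AI's connectors.

```python
import sqlite3

def rows_as_knowledge(conn, sql, params=()):
    """Run a query and serialize each row as a 'column=value' text snippet."""
    cursor = conn.execute(sql, params)
    columns = [d[0] for d in cursor.description]
    return [
        "; ".join(f"{col}={val}" for col, val in zip(columns, row))
        for row in cursor.fetchall()
    ]

# In-memory database standing in for a real data warehouse (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [(1, "acme", 1200.0), (2, "globex", 75.5)],
)

# Unstructured side: free-text policy documents.
policy_docs = ["Transactions above 1000 require manager approval."]

# One combined list that a grounded model could be asked to answer from.
knowledge = rows_as_knowledge(
    conn,
    "SELECT id, customer, amount FROM transactions WHERE amount > ?",
    (1000,),
) + policy_docs

for snippet in knowledge:
    print(snippet)
```

With both kinds of snippet in one list, a question such as whether a given transaction needs approval can be answered from the record and the policy together.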

Enterprise Applications of the GLM: Precision Where It Matters Most

Tailored Solutions for High-Stakes Industries

The GLM's focus on accuracy and groundedness makes it particularly well-suited for industries where precision is non-negotiable. Some key areas where the GLM can make a significant impact include:

- Finance: Ensuring compliance with regulations, generating accurate reports, and providing reliable market analyses.

- Healthcare: Assisting with medical research, patient record management, and treatment recommendations.

- Telecommunications: Managing complex network infrastructures, troubleshooting technical issues, and ensuring regulatory compliance.

In these industries and others, the GLM's ability to stick strictly to provided information sources while still offering intelligent, context-aware responses can be a game-changer.

Adapting to Complex Enterprise Environments

One of the challenges in enterprise AI adoption is the complexity and “noisiness” of real-world business data. The GLM has been designed with this in mind and is intended to handle the messy, sometimes contradictory information that often exists in large organizations.

By leveraging its advanced retrieval and re-ranking capabilities, the GLM can sift through complex datasets to find the most relevant and accurate information. This ability to adapt to and make sense of complex enterprise environments sets it apart from more rigid AI solutions.

Avoiding Commentary: When Precision Trumps Conversation

The Power of Saying “I Don't Know”

One of the GLM's most valuable features, particularly in enterprise settings, is its ability to avoid unnecessary commentary. Through the use of an “avoid_commentary” flag, users can instruct the model to stick strictly to factual information derived from the provided sources.

This feature is crucial in scenarios where any form of speculation or extrapolation could lead to serious consequences. By clearly indicating when it doesn't have enough information to answer a question, the GLM helps prevent the spread of misinformation and reduces the risk of AI-induced errors.

Balancing Precision and Helpfulness

While the ability to avoid commentary is a powerful tool, Contextual AI recognizes that there are scenarios where some level of conversational output can be beneficial. The GLM allows users to fine-tune this balance, enabling them to choose between strict factual responses and more conversational interactions depending on the specific use case.

This flexibility makes the GLM adaptable to a wide range of enterprise needs, from highly regulated environments where every word matters to more general applications where a conversational tone can enhance user engagement.
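To show where such a flag might sit in a request, the sketch below assembles a JSON body with `avoid_commentary` set on or off. Only the flag name comes from the article; the `messages` and `knowledge` field names and the overall payload shape are assumptions, and the code builds the payload without sending it anywhere. Consult Contextual AI's API documentation for the actual request schema.

```python
import json

def build_generate_request(question, knowledge, avoid_commentary=True):
    """Assemble a JSON body for a grounded-generation request.

    `avoid_commentary` is the flag named in the article; `messages` and
    `knowledge` are assumed field names, not confirmed by the article.
    """
    if not knowledge:
        raise ValueError("grounded generation needs at least one knowledge snippet")
    return json.dumps({
        "messages": [{"role": "user", "content": question}],
        "knowledge": list(knowledge),
        "avoid_commentary": bool(avoid_commentary),
    })

# Strict mode for a regulated workflow, conversational mode for a general one.
strict = build_generate_request(
    "What is the refund window?",
    ["The refund window is 30 days."],
    avoid_commentary=True,
)
relaxed = build_generate_request(
    "What is the refund window?",
    ["The refund window is 30 days."],
    avoid_commentary=False,
)
print(strict)
print(relaxed)
```

Keeping the flag as an explicit per-request argument lets the same application use strict output for compliance tasks and a more conversational style elsewhere.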

Getting Started with GLM: Accessibility Meets Enterprise-Grade Solutions

Free Access for Developers

Contextual AI has made it easy for developers to get started with the GLM, offering free access for the first 1 million input and output tokens. This generous free tier allows developers to explore the model's capabilities and integrate it into their projects without any upfront cost.

Key features of the free access include:

- Access to the /generate standalone API

- Comprehensive documentation and code examples

- SDKs for popular programming languages

This approach allows developers to test the GLM's capabilities in real-world scenarios before committing to a full implementation.

Scaling Up: Enterprise-Grade Solutions

For businesses looking to implement the GLM at scale, Contextual AI offers custom pricing and rate limits tailored to specific use cases. This flexibility ensures that enterprises of all sizes can benefit from the GLM's capabilities without being constrained by one-size-fits-all pricing models.

Enterprise customers also benefit from:

- Dedicated support for integrating the GLM into existing workflows

- Access to Contextual AI's full platform for optimizing RAG pipelines

- Customization options to meet specific industry requirements

By offering both accessible entry points for developers and scalable solutions for enterprises, Contextual AI is positioning the GLM as a versatile tool that can grow alongside businesses' AI needs.

Pioneering a New Standard in AI Accuracy

The launch of Contextual AI's Grounded Language Model represents a significant milestone in the evolution of enterprise AI. By prioritizing accuracy, transparency, and reliability, the GLM sets a new benchmark for what businesses can expect from their AI solutions.

As organizations continue to grapple with the challenges of implementing AI in high-stakes environments, tools like the GLM offer a path forward. By providing a model that businesses can truly rely on, Contextual AI is helping to unlock the full potential of AI in enterprise settings.

The GLM's impressive performance on the FACTS benchmark, coupled with its innovative features like inline attributions and multimodal capabilities, positions it as a leader in the next generation of AI models. As businesses increasingly demand AI solutions that can deliver both intelligence and reliability, the GLM stands ready to meet that need.

The Future of Enterprise AI: Precision, Trust, and Scalability

As we look to the future of enterprise AI, the principles embodied by the GLM — groundedness, transparency, and adaptability — are likely to become increasingly important. The ability to deploy AI solutions that can be trusted to provide accurate, verifiable information will be crucial as businesses seek to integrate AI more deeply into their operations.

The GLM's success also highlights the growing importance of specialized AI models tailored to specific industry needs. While general-purpose models will continue to have their place, the demand for highly accurate, domain-specific AI solutions is likely to grow, particularly in regulated industries.

For businesses looking to stay ahead of the curve in AI adoption, exploring solutions like the GLM that prioritize accuracy and reliability is becoming increasingly important. As the AI landscape continues to evolve, tools that can deliver both intelligence and trustworthiness will be key to unlocking the full potential of AI in enterprise settings.

Ready to experience the power of truly grounded AI for your enterprise? Try out Contextual AI's GLM today and discover how precision-driven AI can transform your business operations.
